So I just moved to Southwest Florida from Western Kentucky. No more churches on every street corner, no more red or dead politics.

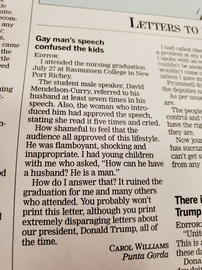

Nope, belief here is more insidious because it's subtle and far more toxic. This particular area voted for Trump by a landslide, and I happened to notice this letter to the editor in the local paper.

What are we supposed to tell our kids about gay people?
